I've been thinking a lot about interactions and interaction design lately, and I'm finally forcing myself to sit down and hash out some of my thoughts. I really got moving down this path in February, when I started working on Arduino, Leap Motion, and Processing projects.

I'd had ideas about interactive sculpture and other interactive media projects before, but I never followed through. A lot of the inspiration for this most recent push came from the 100 days of hustle, but another big piece was hearing a talk by Jack Dorsey. The part that really stuck with me was when he talks about doing whatever it takes to manifest the things you want to see in the world.

One of the things I've been thinking about for years is an immersive, interactive space: a room that collects information about each member of the audience and creates the art accordingly (in my head it's a blend of projection, music, and other moving elements). The piece could also influence the behavior of the audience through projections, sound, and light, creating a two-way street of influence and some interesting feedback loops. This project is years away for me, but it is definitely something I would like to build, so I'm trying to push as hard as I can in that direction.

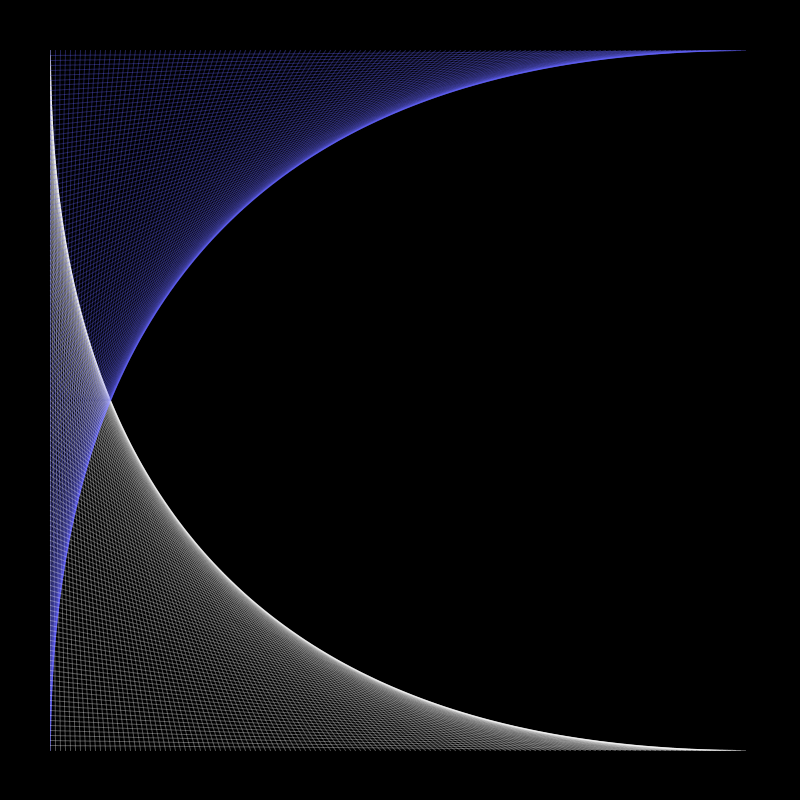

The Arduino/Leap Motion/Processing projects have been the first step towards that goal. In the short time I've been playing with them, I've come up with a ton of new ideas, and I can now see how things I found fascinating before fall under the same umbrella.

One of the things I find so interesting about interactive media is how it plays with the roles of actor and observer. I love the simple question, "Am I influencing the actions of the art, or is the art influencing my actions?" I suppose this is true of all art to some degree, but I think it is particularly tangible with interactive and kinetic pieces. I love the idea that the audience has no choice but to participate.

One of the reasons I want to explore the physical-digital-physical interaction chain, particularly with motion control, is that in everyday life we are fairly removed from these chains. For example, a light switch is a physical-electrical-optical interaction, but it feels simple and natural because we’re used to it. The on/off binary is simple, so why think about the chain of events that creates the on- or off-state?

I want to create ‘unnatural’ interactions that can be learned through play and intuition. One thing that I think many current physical-digital-physical interactions lack is adaptivity and creative decision making. Usually it’s just binary on/off. I want to build things that take (and give) more than that.

Working with the Leap has gotten me thinking about gestures and what sorts of interactions make sense for motion control. Making a motion-controlled menu for my Tether drawing program was very difficult, because small hand and finger movements make precise selection hard. I ended up scrapping the motion/gesture-controlled menu for a mouse-controlled GUI.

To get around this, the program needs to be smarter. For example, there could be a gesture to indicate that you want to use the menu, which puts the program into menu mode. In menu mode, a cursor moves towards your hand or finger rather than appearing exactly at your finger position, which compensates for the tracking's sensitivity. Essentially, everything has to be rethought because the inputs and controls are different. At the same time, it can’t be too different, or people won’t be able to learn or understand it.
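Here's a minimal sketch of that menu-mode cursor in Python, assuming some tracker hands you a fingertip position in screen pixels once per frame (the Leap API itself isn't shown). Instead of jumping to the finger, the cursor covers a fixed fraction of the remaining distance each frame and ignores jitters smaller than a dead zone. `MenuCursor`, `easing`, and `dead_zone` are hypothetical names, and the default values are guesses that would need tuning.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class MenuCursor:
    """Hypothetical menu-mode cursor that eases toward the tracked fingertip."""

    x: float = 0.0
    y: float = 0.0
    easing: float = 0.2     # fraction of the remaining distance covered per frame (assumed)
    dead_zone: float = 2.0  # movements smaller than this, in pixels, are ignored (assumed)

    def update(self, finger_x: float, finger_y: float) -> tuple[float, float]:
        dx = finger_x - self.x
        dy = finger_y - self.y
        # Ignore tiny jitters so the cursor can hold still over a menu item.
        if (dx * dx + dy * dy) ** 0.5 < self.dead_zone:
            return self.x, self.y
        # Move only part of the way toward the finger (linear interpolation).
        self.x += dx * self.easing
        self.y += dy * self.easing
        return self.x, self.y
```

Calling `update(100, 0)` three times from the origin moves the cursor to roughly x = 20, 36, and 48.8, so it glides toward the finger instead of snapping to it. A lower `easing` feels steadier but laggier; a higher one feels more responsive but lets more tremor through.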

What would GUIs and interfaces look like if we hadn't gone through the age of the mouse? What will the future look like? Is it *Minority Report*, or something else? What else is needed?

Another thing I wonder about, especially with motion tracking like the Leap or Kinect, is that it doesn’t have the start/stop mechanism that a mouse has (i.e., you take your hand off the mouse to stop). I’ve tried to mimic this by using depth (towards the screen) to create a threshold: behind it, the action doesn’t occur; past it, the action occurs. I’m not sure this is the way to go, though, and there may need to be a physical indicator of the interface. It may make sense to pair the Leap with an actual physical controller or switch that lets the user change modes, or to use gestures that are only picked up in one mode.
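One way to make that depth threshold less twitchy is to give it two boundaries instead of one, a technique usually called hysteresis (my addition, not something from the Leap SDK). The action starts when your hand crosses the near boundary and only stops once you pull back past a farther one, so a hand hovering right at the line doesn't flicker on and off. This sketch assumes a depth reading (for example, in millimeters) where smaller values mean closer to the screen; `DepthTrigger`, `enter_at`, and `exit_at` are hypothetical names.

```python
class DepthTrigger:
    """Hypothetical push-to-act switch driven by hand depth, with hysteresis.

    Assumes smaller depth values mean the hand is closer to the screen.
    """

    def __init__(self, enter_at: float = 0.0, exit_at: float = 20.0) -> None:
        if exit_at <= enter_at:
            raise ValueError("exit_at must be farther from the screen than enter_at")
        self.enter_at = enter_at  # crossing in front of this starts the action
        self.exit_at = exit_at    # pulling back behind this stops it
        self.active = False

    def update(self, depth: float) -> bool:
        """Feed one depth reading; return whether the action is currently on."""
        if not self.active and depth < self.enter_at:
            self.active = True
        elif self.active and depth > self.exit_at:
            self.active = False
        return self.active
```

Feeding it depths of 30, 5, -1, 10, and 25 gives `False, False, True, True, False`: the action engages only once the hand crosses 0, and it stays on at 10 because that's still inside the exit boundary at 20.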

As things progress, the physical controller might be replaced by something like a certain posture, a nod, or even a vocal command. I think in the future there will be developers/designers who build custom interfaces for people. Essentially, they would act as technicians or consultants who learn about a person's behaviors and movements and customize the controller just for them. Combined with 3D printing and motion capture, this could make for some highly adaptable systems.

There is so much information coming through a device like the Leap Motion controller, and I wonder: which parameters are going to be used and passed through to make an effective user experience? Will they be the same for every person? Could the interface learn a person's preferences and adapt to suit their needs? (For example, you say a command and do a gesture, and eventually you don’t need to say the command because the system has learned the gesture.) What about multiple users? How do they work together with different gestures? Would they each need their own set of gestures?
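The "say it, then eventually just gesture it" idea could start out as simple counting. This is a hypothetical sketch, not a real gesture-recognition pipeline: it assumes something upstream already labels gestures and spoken commands as strings, and it treats a gesture as a learned shortcut once it has been paired with the same command a set number of times. `GestureLearner` and `confirmations_needed` are made-up names.

```python
from __future__ import annotations

from collections import Counter


class GestureLearner:
    """Hypothetical learner: a gesture becomes a shortcut for a spoken command
    once the two have been observed together often enough."""

    def __init__(self, confirmations_needed: int = 3) -> None:
        self.confirmations_needed = confirmations_needed
        # gesture label -> how often each spoken command accompanied it
        self.pairings: dict[str, Counter] = {}

    def observe(self, gesture: str, command: str | None) -> str | None:
        """Record one event and return the command to run, if any."""
        if command is not None:
            # Gesture plus voice: run the spoken command and remember the pairing.
            self.pairings.setdefault(gesture, Counter())[command] += 1
            return command
        # Gesture alone: run it only if the association has been learned.
        return self.learned_command(gesture)

    def learned_command(self, gesture: str) -> str | None:
        counts = self.pairings.get(gesture)
        if not counts:
            return None
        command, n = counts.most_common(1)[0]
        return command if n >= self.confirmations_needed else None
```

After three `observe("swipe_left", "undo")` calls, `observe("swipe_left", None)` returns `"undo"` on its own. For multiple users, one option would be to keep a separate learner per person, which would also let each person have their own set of gestures.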

There are so many interesting questions, which is why I’m so inspired by this field. There’s so much to experiment and play with, and a lot of room to grow and develop.